
Duration: 2 Months / 20 Hours. Price: 125,000

120,000

Natural Language Processing with Deep Learning

This course provides an in-depth exploration of Natural Language Processing (NLP) with a focus on using deep learning techniques to process and analyze human language. Through this course, participants will learn how to apply neural networks, including Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM), and Transformers, to solve complex NLP tasks such as text classification, machine translation, sentiment analysis, and more.

Course Objectives:

Understand the core concepts of Natural Language Processing (NLP)

Implement deep learning models to solve NLP tasks such as text generation, machine translation, and sentiment analysis

Work with advanced architectures like Transformers and BERT for state-of-the-art NLP solutions

Gain hands-on experience with popular deep learning frameworks like TensorFlow or PyTorch for NLP tasks

Overview of NLP:

Key challenges in NLP: Ambiguity, variability, and context understanding

Fundamentals of NLP

Statistical NLP: N-grams, TF-IDF, and language modeling
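
The statistical topics above can be illustrated with a short sketch. The example below extracts n-grams and computes TF-IDF weights in plain Python. It assumes one common TF-IDF variant (term frequency normalized by document length, and idf = log(N / df)); libraries often use smoothed variants, and the helper names `ngrams` and `tf_idf` and the toy documents are made up for illustration.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return the list of n-grams (as tuples) in a token sequence."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def tf_idf(docs):
    """Compute TF-IDF weights for a list of tokenized documents.

    Assumed variant: tf = count / doc_length, idf = log(N / df).
    """
    n_docs = len(docs)
    # Document frequency: number of documents that contain each term.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


# Toy corpus, invented for this example.
docs = [
    "the cat sat on the mat".split(),
    "the dog sat on the log".split(),
    "cats and dogs are pets".split(),
]
bigrams = ngrams(docs[0], 2)
weights = tf_idf(docs)
```

In this toy corpus, "cat" appears in only one document, so it receives a higher weight in the first document than "the", which appears in two.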

Hands-on Lab:

Build a simple text processing pipeline for tokenization and sentiment analysis using Python's NLTK or SpaCy library
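
As a rough idea of what such a pipeline does, here is a minimal sketch in plain Python. It is not the NLTK or SpaCy approach used in the lab: the regular-expression tokenizer and the tiny word lists `POSITIVE` and `NEGATIVE` are assumptions made only for illustration.

```python
import re

# Hypothetical toy lexicon; a real lab would rely on library resources.
POSITIVE = {"good", "great", "love", "excellent"}
NEGATIVE = {"bad", "poor", "hate", "terrible"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Label text by counting positive and negative lexicon hits."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

A lexicon count like this ignores negation and context ("not good" would score as positive), which is one reason later modules move to learned models.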

Module 2: Word Embeddings and Representation

Vector Representation of Words:

Understanding the mathematics behind word embeddings

Applications of word embeddings in NLP tasks

Contextual Word Embeddings:

How contextual word embeddings improve performance in NLP tasks

Hands-on Lab:

Implement Word2Vec using Gensim to generate word embeddings and explore nearest neighbors
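
Nearest-neighbor lookup over embeddings usually comes down to cosine similarity. The sketch below shows the idea in plain Python. The vectors in `EMBEDDINGS` are hand-made toy values, not trained Word2Vec output, and the function names are hypothetical; in the lab, Gensim would supply both the vectors and the lookup.

```python
import math

# Hand-made 3-dimensional toy vectors (an assumption, not trained embeddings).
EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
    "pear": [0.2, 0.1, 0.8],
}


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nearest_neighbors(word, k=1):
    """Return the k words whose vectors are most similar to `word`."""
    query = EMBEDDINGS[word]
    scored = [
        (cosine(query, vec), other)
        for other, vec in EMBEDDINGS.items()
        if other != word
    ]
    return [other for _, other in sorted(scored, reverse=True)[:k]]
```

With these toy vectors, `nearest_neighbors("king")` returns `["queen"]`, because the two vectors point in almost the same direction.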

Module 3: Sequence Models for NLP – RNN, LSTM, and GRU

Recurrent Neural Networks (RNNs):

Limitations of RNNs: Vanishing and exploding gradients
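
The gradient problem can be seen numerically in a deliberately simplified setting. The sketch below assumes a one-unit RNN with no input, h_t = tanh(w * h_{t-1}), and multiplies the per-step derivatives w * (1 - h_t^2) to get the gradient of the final state with respect to the initial one. The function name and the chosen values of `w` and `h0` are illustrative only.

```python
import math


def gradient_through_time(w, steps, h0):
    """Return d h_steps / d h_0 for the recurrence h_t = tanh(w * h_{t-1})."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: each step contributes a factor w * (1 - tanh^2).
        grad *= w * (1.0 - h * h)
    return grad


# Each factor is at most 0.5 in magnitude, so the product shrinks fast.
vanishing = gradient_through_time(w=0.5, steps=50, h0=0.5)

# Around h = 0 the tanh is nearly linear, so each factor is about 1.5.
exploding = gradient_through_time(w=1.5, steps=50, h0=0.0)
```

After 50 steps the first gradient is below 1e-12 and the second is above 1e6, which is why long sequences are hard for plain RNNs to learn from.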

Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs):

How LSTMs and GRUs mitigate the vanishing gradient problem

Applications of LSTM/GRU in NLP tasks such as text classification and sequence generation

Hands-on Lab:

Build and train an LSTM model for text generation using TensorFlow/Keras

Module 4: Attention Mechanism and Transformers

How attention helps in aligning source and target in sequence-to-sequence models

Transformers:

Encoder-decoder model and self-attention mechanism

Understanding positional encoding
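
The two ideas above, self-attention and positional encoding, can be sketched in a few lines of plain Python. This is a simplified illustration, not a full Transformer layer: it assumes scaled dot-product attention without learned projection matrices or multiple heads, and the sinusoidal positional encoding scheme. The function names are made up for this example.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


def positional_encoding(position, d_model):
    """Sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    encoding = []
    for i in range(d_model):
        angle = position / (10000 ** ((2 * (i // 2)) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding


# Self-attention: the same token vectors serve as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Because attention itself has no notion of order, the positional encoding is added to each token vector before attention so that position information is available to the model.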

Applications of Transformers in NLP:

Machine translation, text summarization, and text generation

Hands-on Lab:

Implement a Transformer model using TensorFlow for machine translation

Module 5: Pretrained Language Models – BERT, GPT, and Beyond

Introduction to Pretrained Models:

Overview of BERT, GPT, and T5 architectures

Fine-tuning pretrained models for specific NLP tasks

Bidirectional Encoder Representations from Transformers (BERT):

How to use BERT for text classification, sentiment analysis, and question-answering

Generative Pretrained Transformer (GPT) Models:

Overview of GPT, GPT-2, and GPT-3 for text generation and conversational AI

Hands-on Lab:

Fine-tune a BERT model for sentiment classification using Hugging Face's Transformers library

Text classification for spam detection, product categorization, etc.

Machine Translation and Text Summarization:

Question Answering Systems:

Building models for answering questions from text using pretrained models like BERT

Hands-on Lab:

Build a text summarization tool using an attention-based Transformer model

Module 7: Conversational AI and Chatbots

Introduction to Conversational AI:

Dialogue management and intent recognition

Building a Chatbot with Deep Learning:

How to handle multi-turn dialogues and context management
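
One simple way to manage context across turns is a sliding window over the conversation history. The sketch below is an assumption about how this might be done, not the course's method: the class `DialogueContext`, its `max_turns` parameter, and the prompt format are all hypothetical.

```python
class DialogueContext:
    """Keeps only the most recent turns so the model sees a bounded history."""

    def __init__(self, max_turns=4):
        self.max_turns = max_turns
        self.turns = []

    def add(self, speaker, text):
        """Record a turn and drop the oldest ones beyond the window."""
        self.turns.append((speaker, text))
        self.turns = self.turns[-self.max_turns:]

    def as_prompt(self):
        """Render the remembered turns as text to pass to a language model."""
        return "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)
```

For example, with `max_turns=2`, adding a third turn discards the first, so the prompt always contains the two latest turns. Real systems often trim by token count or summarize older turns instead.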

Hands-on Lab:

Build a simple chatbot using GPT and deploy it on a messaging platform

Upon completion of this course, learners can explore various career opportunities in the field of NLP and AI, such as:

NLP Engineer: Develop models for text analysis, summarization, and translation

AI Researcher: Contribute to advancing state-of-the-art techniques in NLP and deep learning

Data Scientist: Apply NLP techniques to extract insights from unstructured text data

Chatbot Developer: Build and deploy conversational agents for customer service or virtual assistants

Machine Learning Engineer: Work on integrating deep learning solutions into NLP applications


Stay connected even when you’re apart

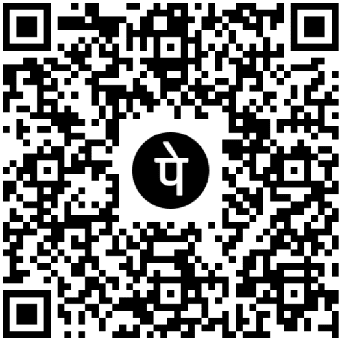

Join our WhatsApp Channel – Get discount offers

500+ Free Certification Exam Practice Question and Answers

Your FREE eLEARNING Courses (Click Here)

Internships, Freelance and Full-Time Work opportunities

Join Internships and Referral Program (click for details)

Work as Freelancer or Full-Time Employee (click for details)

Flexible Class Options

Weekend Classes for Professionals (SAT | SUN)

Corporate Group Training Available

Online Classes – Live Virtual Class (L.V.C), Online Training
